Google Cloud's L4 Instances: Revolutionizing AI Workloads with Cutting-Edge GPU Technology

Google Cloud's L4 GPU instances, built on NVIDIA's L4 Tensor Core GPU, are a significant step forward for cloud-based AI infrastructure. They offer strong performance for inference workloads, with reported cost reductions of up to 70% compared with previous-generation options.

UPDATED: April 1, 2025

The artificial intelligence landscape is evolving at breakneck speed, with computational demands growing exponentially as models become increasingly sophisticated. Google Cloud has responded to this challenge with its groundbreaking L4 GPU instances, purpose-built to address the complex requirements of modern AI workloads. These instances represent a significant leap forward in cloud-based GPU computing, offering an optimal balance of performance, efficiency, and cost-effectiveness that makes advanced AI capabilities more accessible than ever before.

In this comprehensive guide, we'll explore how Google Cloud's L4 instances are transforming the AI development landscape, providing developers and organizations with the tools they need to push the boundaries of what's possible in machine learning, computer vision, and generative AI applications. From technical specifications to real-world applications, we'll dive deep into what makes these instances a game-changer for AI practitioners across industries.

Understanding Google Cloud's L4 GPU Instances

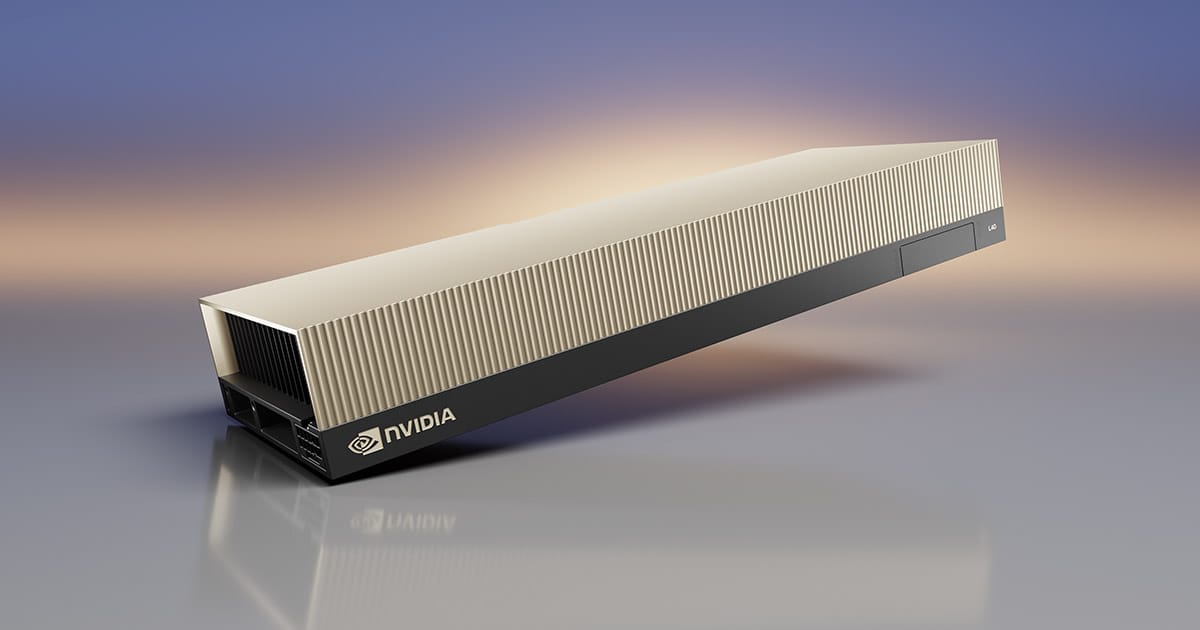

The NVIDIA L4 Tensor Core GPU: A Technical Overview

At the heart of Google's L4 instances lies NVIDIA's L4 Tensor Core GPU, designed with AI inference as a primary target alongside video and graphics workloads. NVIDIA rates each L4 at up to 485 TOPS (trillion operations per second) of INT8 Tensor Core performance with structured sparsity, and roughly half that for dense operations. This makes it well suited to running complex neural networks at scale.

The L4 GPU has 24 GB of GDDR6 memory, enough to hold many production models on a single GPU. For example, the FP16 weights of a 7-billion-parameter model occupy about 14 GB, which leaves headroom for activations and caches. Larger models can be served by quantizing them or by splitting them across several GPUs.
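A back-of-the-envelope check helps decide whether a model fits on one L4. The following is a minimal estimate, not a sizing tool. It multiplies parameter count by bytes per parameter, then adds a flat headroom fraction to cover activations, caches, and framework overhead. The 20% headroom default and the helper names are assumptions for illustration.

```python
# Rough check of whether a model's weights fit in one L4's memory.
# Assumption: weights dominate; activations, KV cache, and framework overhead
# are approximated by a flat headroom fraction (hypothetical 20% default).

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1, "int8": 1}

L4_MEMORY_GB = 24  # NVIDIA L4: 24 GB GDDR6


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Size of the model weights in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_l4(num_params: float, dtype: str, headroom: float = 0.2) -> bool:
    """True if the weights plus a headroom fraction fit in one L4's memory."""
    return weight_memory_gb(num_params, dtype) * (1 + headroom) <= L4_MEMORY_GB


if __name__ == "__main__":
    for params in (7e9, 13e9):
        for dtype in ("fp16", "int8"):
            gb = weight_memory_gb(params, dtype)
            print(f"{params / 1e9:.0f}B params, {dtype}: {gb:.1f} GB, fits: {fits_on_l4(params, dtype)}")
```

Under these assumptions, a 7B-parameter model fits in FP16 with about 14 GB of weights. A 13B model does not fit in FP16, because its weights alone take 26 GB. It needs INT8 or FP8 quantization, or a second GPU.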

What truly sets the L4 apart is its efficiency. It is built on NVIDIA's Ada Lovelace architecture and draws at most 72 W, about the same as the previous-generation T4. It delivers much higher throughput within that budget, with reported gains of up to 5x in performance per watt over previous generations. This efficiency translates directly into lower operating costs and energy use, which is an advantage for organizations committed to sustainable computing practices.
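Performance per watt is throughput divided by power. Its inverse is the energy spent on each inference. The following computes both. The power and throughput figures are hypothetical placeholders chosen only to show the arithmetic; they are not measured results for any specific GPU.

```python
# Energy per inference = average power / throughput  (W / (inferences/s) = J per inference).
# All numbers below are hypothetical placeholders, not measured GPU results.


def joules_per_inference(avg_power_watts: float, inferences_per_sec: float) -> float:
    """Energy cost of one inference, in joules."""
    return avg_power_watts / inferences_per_sec


def perf_per_watt(inferences_per_sec: float, avg_power_watts: float) -> float:
    """Throughput delivered per watt of board power."""
    return inferences_per_sec / avg_power_watts


# Two hypothetical GPUs with a similar power draw but different throughput.
old_gpu = {"power_w": 70.0, "throughput": 400.0}
new_gpu = {"power_w": 72.0, "throughput": 1200.0}

ratio = perf_per_watt(new_gpu["throughput"], new_gpu["power_w"]) / perf_per_watt(
    old_gpu["throughput"], old_gpu["power_w"]
)
print(f"old: {joules_per_inference(old_gpu['power_w'], old_gpu['throughput']):.3f} J/inference")
print(f"new: {joules_per_inference(new_gpu['power_w'], new_gpu['throughput']):.3f} J/inference")
print(f"perf-per-watt ratio: {ratio:.2f}x")
```

When power draw is roughly constant, almost all of the efficiency gain comes from throughput. This is why the same model, precision, and batch size must be used when comparing GPUs.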

Google Cloud's Implementation: Flexible Configuration Options

Google Cloud has thoughtfully implemented L4 GPUs across a range of instance types, giving users the flexibility to select configurations that precisely match their workload requirements. Options include:

- G2 standard instances: Offering 1, 2, 4, or 8 NVIDIA L4 GPUs

- G2 custom instances: Allowing for tailored vCPU and memory configurations

- GKE (Google Kubernetes Engine) integration: Enabling containerized AI workloads with L4 acceleration

This flexibility ensures organizations can right-size their infrastructure, optimizing both performance and cost. Whether you're deploying a single model for inference or orchestrating a complex multi-model AI system, Google Cloud's L4 instances provide configuration options that align perfectly with your specific needs.
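When you size a G2 deployment, the machine type determines how many L4 GPUs you get. The following helper picks the smallest G2 machine type for a given GPU count. The table reflects the G2 machine types as we understand them at the time of writing: g2-standard-24 carries 2 GPUs, g2-standard-48 carries 4, g2-standard-96 carries 8, and the rest carry 1. Verify it against Google Cloud's current documentation before relying on it.

```python
# G2 machine types and the number of L4 GPUs each one attaches.
# Assumption: mirrors Google Cloud's G2 documentation at the time of writing.
G2_MACHINE_TYPES = {
    "g2-standard-4": 1,
    "g2-standard-8": 1,
    "g2-standard-12": 1,
    "g2-standard-16": 1,
    "g2-standard-24": 2,
    "g2-standard-32": 1,
    "g2-standard-48": 4,
    "g2-standard-96": 8,
}


def vcpus(machine_type: str) -> int:
    """Parse the vCPU count from a name like 'g2-standard-16'."""
    return int(machine_type.rsplit("-", 1)[1])


def smallest_machine_for(gpus: int, min_vcpus: int = 0) -> str:
    """Pick the G2 type with exactly `gpus` L4s and the fewest vCPUs >= min_vcpus."""
    candidates = [
        name
        for name, count in G2_MACHINE_TYPES.items()
        if count == gpus and vcpus(name) >= min_vcpus
    ]
    if not candidates:
        raise ValueError(f"no G2 machine type with {gpus} GPU(s) and >= {min_vcpus} vCPUs")
    return min(candidates, key=vcpus)


if __name__ == "__main__":
    print(smallest_machine_for(1))                # smallest single-GPU option
    print(smallest_machine_for(1, min_vcpus=10))  # more CPU for preprocessing
    print(smallest_machine_for(4))
```

For example, a preprocessing-heavy pipeline that needs more CPU per GPU can ask for `min_vcpus=10`. It then gets g2-standard-12 instead of g2-standard-4, still with a single L4.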

The Performance Advantage: Why L4 Instances Excel

Optimized for AI Inference Workloads

The L4 GPU architecture was designed from the ground up to excel at inference workloads, which represent the production deployment phase of AI development. This specialization delivers several key advantages:

- Low-latency processing: Critical for real-time applications like conversational AI, video analysis, and recommendation systems

- High throughput: Enabling massive parallel processing of multiple inference requests simultaneously

- Fourth-generation Tensor Cores: Hardware support for FP8, FP16, BF16, and INT8 math, letting inference workloads trade numeric precision for throughput where accuracy allows

These capabilities make L4 instances particularly well-suited for serving AI models in production environments where consistent, reliable performance is essential. The architecture's emphasis on inference efficiency allows organizations to deploy more sophisticated models without corresponding increases in infrastructure costs.

Benchmarking Results: L4 vs. Alternative Options

Reported comparisons against previous-generation GPUs and competing offerings show performance advantages for Google Cloud's L4 instances across a range of common AI workloads. Actual gains depend on the model, numeric precision, batch size, and baseline:

- Computer vision tasks: Up to 3x faster inference for object detection and image classification models

- Natural language processing: Up to 2.5x improved throughput for transformer-based language models

- Recommender systems: Up to 4x better performance for deep learning recommendation models

These gains go well beyond incremental tuning and can change what is practical for cloud-based AI deployments. For many organizations, they translate directly into improved user experiences, faster time-to-market, and a stronger competitive position.
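Published speedups depend heavily on the model, precision, batch size, and baseline, so it is worth measuring your own workload on each candidate instance. The following is a minimal, framework-agnostic timing harness. `benchmark` is a hypothetical helper. For GPU code, the timed function must block until the device finishes (for example, by calling `torch.cuda.synchronize()` in PyTorch); otherwise the timings will be misleading.

```python
import statistics
import time
from typing import Callable, Dict, List


def benchmark(fn: Callable[[], object], warmup: int = 5, iters: int = 50) -> Dict[str, float]:
    """Time `fn` after warm-up runs; report latency percentiles (ms) and throughput."""
    if iters < 2:
        raise ValueError("need at least 2 timed iterations for percentiles")
    for _ in range(warmup):
        fn()  # warm-up: lets caches, lazy initialization, and clocks settle
    samples: List[float] = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()  # for GPU work, fn must synchronize before returning
        samples.append((time.perf_counter() - start) * 1000.0)
    cut_points = statistics.quantiles(samples, n=100)  # 99 percentile cut points
    return {
        "p50_ms": statistics.median(samples),
        "p95_ms": cut_points[94],  # 95th percentile
        "throughput_per_s": 1000.0 / statistics.mean(samples),  # sequential, one call at a time
    }


if __name__ == "__main__":
    # Stand-in CPU workload; replace with a call into your model.
    result = benchmark(lambda: sum(i * i for i in range(10_000)))
    print({k: round(v, 3) for k, v in result.items()})
```

Run the same harness with the same model and inputs on the old and new instances. The ratio of the two `p50_ms` values gives your own latency speedup.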

Cost-Efficiency: The Economic Case for L4 Instances

Operational Expense Optimization

One of the most compelling aspects of Google Cloud's L4 instances is their exceptional cost-efficiency. The L4 GPU's architectural improvements deliver substantial performance-per-dollar advantages compared to previous GPU generations:

- Lower TCO: Reported total cost of ownership reductions of up to 70% for inference workloads compared to previous-generation options

- Right-sized compute: The ability to match instance configurations precisely to workload requirements, eliminating wasted resources

- Spot instance compatibility: Support for Spot VMs, enabling additional cost savings of up to 91% for fault-tolerant workloads

For organizations operating at scale, these efficiencies can add up to substantial annual savings while also improving application performance. Because each L4 handles more work, fewer instances are needed for an equivalent workload. This simplifies infrastructure management and reduces operational complexity.
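Cost per inference captures both price and performance in one metric: it is the hourly price divided by the inferences served per hour. The following uses hypothetical prices and throughputs, not Google Cloud list prices. It shows how a pricier but faster instance can still lower unit cost, and how a Spot discount compounds on top.

```python
# Cost per million inferences from an hourly VM price and sustained throughput.
# Prices, throughputs, and the discount are hypothetical placeholders.


def cost_per_million(hourly_price_usd: float, inferences_per_sec: float) -> float:
    """USD per one million inferences at a sustained request rate."""
    inferences_per_hour = inferences_per_sec * 3600
    return hourly_price_usd / inferences_per_hour * 1_000_000


def spot_price(on_demand_usd: float, discount: float) -> float:
    """Apply a fractional Spot discount (e.g. 0.6 for 60% off)."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return on_demand_usd * (1.0 - discount)


baseline = cost_per_million(hourly_price_usd=1.00, inferences_per_sec=100)
candidate = cost_per_million(hourly_price_usd=1.20, inferences_per_sec=300)
print(f"baseline:  ${baseline:.2f} per 1M inferences")
print(f"candidate: ${candidate:.2f} per 1M inferences")
print(f"reduction: {1 - candidate / baseline:.0%}")
print(f"candidate on Spot (60% off): ${cost_per_million(spot_price(1.20, 0.6), 300):.2f}")
```

In this example, the candidate costs 20% more per hour but serves three times the traffic. As a result, its cost per million inferences falls by 60% before any Spot discount is applied.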

Energy Efficiency and Sustainability Benefits

Beyond direct cost savings, L4 instances deliver significant sustainability advantages through their improved energy efficiency:

- Reduced energy use and carbon footprint: Reportedly up to 80% less energy consumption than previous GPU generations for equivalent workloads

- Lower cooling requirements: The energy-efficient design generates less heat, reducing data center cooling demands

- Alignment with ESG goals: Supports organizational environmental, social, and governance commitments

These sustainability benefits are increasingly important as organizations face growing pressure to minimize the environmental impact of their computing infrastructure. Google Cloud's L4 instances enable AI innovation while supporting responsible computing practices, creating a win-win scenario for business and environmental objectives.

Versatility: Supporting Diverse AI Workloads

Computer Vision Applications

The L4 GPU's architecture makes it exceptionally well-suited for computer vision workloads, which typically involve processing large volumes of image or video data:

- Real-time video analytics: Enabling applications like security monitoring, retail analytics, and sports performance analysis

- Medical imaging: Supporting diagnostic assistance, anomaly detection, and treatment planning

- Manufacturing quality control: Powering defect detection systems with millisecond-level response times

The L4 has dedicated hardware video decoders and encoders, including AV1 encoding. These let it ingest and analyze multiple video streams simultaneously, which makes it a strong fit for applications that analyze visual data from many sources in real time. This capability opens new possibilities for organizations looking to extract actionable insights from visual information at scale.
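Capacity planning for multi-stream video comes down to frames per second: how many frames the model can process versus how many the cameras produce. The following sketch assumes a hypothetical measured model throughput. It also assumes a target utilization below 100%, which leaves headroom for traffic spikes. Analyzing every Nth frame instead of every frame is a common way to stretch capacity.

```python
import math


def max_streams(model_fps: float, camera_fps: float,
                target_utilization: float = 0.8, sample_every: int = 1) -> int:
    """Streams one GPU can sustain given model throughput and per-stream frame rate.

    model_fps: frames/second the model sustains on one GPU (measure this).
    camera_fps: frames/second each stream produces.
    target_utilization: fraction of throughput to plan for, leaving headroom.
    sample_every: analyze every Nth frame (1 = every frame).
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    if sample_every < 1:
        raise ValueError("sample_every must be >= 1")
    analyzed_fps_per_stream = camera_fps / sample_every
    return math.floor(model_fps * target_utilization / analyzed_fps_per_stream)


print(max_streams(900, 30))                  # every frame analyzed
print(max_streams(900, 30, sample_every=3))  # every third frame analyzed
```

This example uses 900 frames/s of model throughput, 80% target utilization, and 30 fps cameras, all hypothetical values. Under those assumptions, one GPU covers 24 streams when every frame is analyzed and 72 when only every third frame is.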

Natural Language Processing and Generative AI

Beyond computer vision, L4 instances excel at natural language processing and generative AI tasks:

- Language model serving: Efficiently deploying BERT, GPT-style decoder models, and other transformer-based architectures

- Text generation: Supporting applications like content creation, summarization, and translation

- Conversational AI: Enabling responsive chatbots and virtual assistants with natural, low-latency interactions

The L4's efficient handling of transformer architectures makes it particularly valuable for organizations deploying language models in production environments. The balanced compute and memory resources align perfectly with the requirements of modern NLP applications, delivering responsive performance while keeping costs under control.
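For transformer serving, memory goes not only to weights but also to the key/value (KV) cache, which grows with context length and batch size. The following is a minimal estimate. It assumes standard multi-head attention with the cache stored in FP16, so the cache size is 2 × layers × KV heads × head dimension × sequence length × batch size × bytes per element. The configuration describes a hypothetical 7B-class decoder and is for illustration only.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_elem: int = 2) -> int:
    """KV cache size: one key and one value vector per layer, head, and token."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


def max_batch_for_budget(budget_bytes: int, num_layers: int, num_kv_heads: int,
                         head_dim: int, seq_len: int, bytes_per_elem: int = 2) -> int:
    """Largest batch whose KV cache fits in `budget_bytes` at the given context length."""
    per_sequence = kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, 1, bytes_per_elem)
    return budget_bytes // per_sequence


# Hypothetical 7B-class decoder: 32 layers, 32 KV heads, head dimension 128, FP16 cache.
cfg = dict(num_layers=32, num_kv_heads=32, head_dim=128)
total = kv_cache_bytes(**cfg, seq_len=4096, batch_size=4)
print(f"KV cache: {total / 2**30:.1f} GiB")
print("max batch in an 8 GiB budget at 4096 tokens:",
      max_batch_for_budget(8 * 2**30, **cfg, seq_len=4096))
```

At 4,096 tokens and a batch of 4, this hypothetical model's KV cache alone takes 8 GiB. Added to roughly 14 GB of FP16 weights, that nearly fills a 24 GB GPU. This is why context length and batch size are usually the first settings to tune.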

Recommendation Systems and Personalization Engines

Recommendation engines represent another area where L4 instances demonstrate exceptional value:

- E-commerce product recommendations: Delivering personalized shopping experiences based on user behavior and preferences

- Content personalization: Powering media streaming platforms with tailored content suggestions

- Financial services: Supporting personalized financial product recommendations and risk assessment

These applications typically involve complex deep learning models that must process large volumes of user interaction data in real-time. The L4's architecture is ideally suited to these workloads, enabling sophisticated personalization while maintaining the low-latency response times that users expect.

Practical Implementation: Getting Started with L4 Instances

Deployment Options and Best Practices

Google Cloud provides multiple deployment paths for L4 instances, catering to different operational models and technical requirements:

- Direct VM provisioning: Creating L4-equipped virtual machines through the Google Cloud Console or gcloud CLI

- Managed instance groups: Deploying scalable, load-balanced groups of L4 instances for production workloads

- GKE integration: Running containerized AI workloads on Kubernetes with L4 GPU acceleration

- Vertex AI: Leveraging Google's managed ML platform with L4 acceleration for simplified AI deployment
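On GKE, a Pod requests L4 GPUs in two ways: a node selector on GKE's accelerator label, and an `nvidia.com/gpu` resource limit. The following builds such a manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts. The Pod name and container image path are hypothetical placeholders. The cluster is assumed to already have an L4 node pool or to run in Autopilot mode.

```python
import json


def l4_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Pod manifest that requests L4 GPUs on GKE."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # GKE labels GPU nodes with their accelerator type; this pins the Pod to L4 nodes.
            "nodeSelector": {"cloud.google.com/gke-accelerator": "nvidia-l4"},
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested as an extended resource in the container limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical image path; substitute your own registry, project, and tag.
    manifest = l4_pod_manifest("inference-server", "us-docker.pkg.dev/PROJECT/REPO/server:latest")
    print(json.dumps(manifest, indent=2))
```
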

For most organizations, the optimal approach depends on existing infrastructure, team expertise, and specific workload characteristics. Google Cloud's documentation provides detailed guidance on selecting the most appropriate deployment model based on these factors.
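For direct VM provisioning, the gcloud CLI is the quickest route. The following assembles a `gcloud compute instances create` command for a G2 VM. The instance name, zone, disk size, and boot image are assumptions. Check which zones offer G2 capacity, and note that a plain Debian image still needs NVIDIA drivers installed. The flags themselves are standard gcloud options.

```python
import shlex
from typing import List


def g2_create_command(name: str, zone: str, machine_type: str = "g2-standard-4",
                      spot: bool = False) -> List[str]:
    """Build a `gcloud compute instances create` command for a G2 (L4) VM."""
    cmd = [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",  # G2 machine types attach L4 GPUs automatically
        "--maintenance-policy=TERMINATE",  # GPU VMs cannot live-migrate during host maintenance
        "--image-family=debian-12",        # assumption: plain Debian; install NVIDIA drivers separately
        "--image-project=debian-cloud",
        "--boot-disk-size=100GB",          # assumption: room for model weights and containers
    ]
    if spot:
        cmd.append("--provisioning-model=SPOT")
    return cmd


if __name__ == "__main__":
    print(shlex.join(g2_create_command("l4-inference-1", "us-central1-a", spot=True)))
```

Building the argument list in code makes it easy to template many instances. `shlex.join` renders it as a copy-pasteable shell command, and the same list can also be passed directly to `subprocess.run`.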

Optimization Techniques for Maximum Performance

To extract maximum value from L4 instances, consider implementing these optimization strategies:

- Batch processing: Grouping inference requests to maximize GPU utilization

- Reduced precision: Running in FP16 or BF16 (mixed precision), or quantizing to FP8 or INT8 where accuracy allows, to improve throughput

- Model distillation: Creating smaller, more efficient models that maintain accuracy while reducing computational demands

- CUDA optimization: Leveraging NVIDIA's CUDA libraries and tools to fine-tune performance
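Batching is usually implemented as dynamic batching. The server waits briefly after the first request arrives so that others can join the same GPU call, trading a small, bounded amount of latency for higher utilization. The following is a minimal single-threaded sketch of that collection step, built on Python's standard `queue` module. `collect_batch` and its default batch size and wait budget are illustrative assumptions. Production servers such as NVIDIA Triton Inference Server provide dynamic batching as a built-in feature.

```python
import queue
import time
from typing import Any, List


def collect_batch(requests: "queue.Queue[Any]", max_batch_size: int = 8,
                  max_wait_s: float = 0.005) -> List[Any]:
    """Block for the first request, then gather more until the batch is full
    or the wait budget (measured from the first request) runs out."""
    batch = [requests.get()]  # wait indefinitely for at least one request
    deadline = time.monotonic() + max_wait_s
    while len(batch) < max_batch_size:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break
        try:
            batch.append(requests.get(timeout=remaining))
        except queue.Empty:
            break  # wait budget exhausted with no new requests
    return batch


if __name__ == "__main__":
    pending: "queue.Queue[int]" = queue.Queue()
    for request_id in range(20):
        pending.put(request_id)
    while not pending.empty():
        print(collect_batch(pending))  # batches of up to 8 request IDs
```

The two parameters set the trade-off. `max_batch_size` caps GPU memory per call, and `max_wait_s` caps the extra latency that any single request can incur while waiting for others.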

These techniques can significantly enhance the already impressive performance of L4 instances, delivering even greater value and efficiency. Google Cloud's performance tuning documentation provides step-by-step guidance for implementing these optimizations across different AI frameworks and model types.
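Quantization maps floating-point values onto a small integer range. The following is a minimal example of symmetric per-tensor INT8 quantization, one common scheme among several; per-channel and asymmetric variants are also widely used. It scales values so the largest magnitude maps to 127 and rounds the rest, so the round-trip error per value is at most half the scale. The function names are hypothetical, and real toolchains such as TensorRT also calibrate scales on representative data.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8: scale = max|x| / 127, q = clamp(round(x / scale))."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero input
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map INT8 values back to approximate floats."""
    return [x * scale for x in quantized]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
print(q, round(scale, 4))
print(dequantize(q, scale))
```

With these inputs, the integers are `[50, -127, 0, 100]` with a scale of about 0.01. Each value then occupies one byte instead of four (FP32) or two (FP16).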

Case Studies: L4 Instances in Action

Retail: Enhancing Customer Experiences Through Computer Vision

A leading retail chain deployed L4 instances to power their in-store computer vision system, which analyzes customer movement patterns, monitors inventory levels, and detects potential security issues. The migration from previous-generation GPUs to L4 instances delivered:

- 67% reduction in inference latency, enabling truly real-time analytics

- 42% decrease in infrastructure costs despite handling 3x more camera feeds

- Improved accuracy in customer behavior analysis, leading to optimized store layouts and increased sales

The retailer's technology team particularly noted the L4's ability to handle multiple simultaneous video streams without performance degradation, enabling them to expand coverage to previously unmonitored areas of their stores.
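The first two retail figures combine into a per-camera result. If total cost falls by 42% while the number of feeds triples, cost per feed becomes 0.58 / 3 of its previous value. The following hypothetical helper makes that arithmetic explicit.

```python
def per_unit_cost_change(cost_change: float, volume_multiplier: float) -> float:
    """Relative change in cost per unit when total cost changes by `cost_change`
    (e.g. -0.42 for a 42% decrease) while volume scales by `volume_multiplier`."""
    return (1 + cost_change) / volume_multiplier - 1


print(f"{per_unit_cost_change(-0.42, 3.0):.1%}")  # change in cost per camera feed
```

That works out to roughly an 81% reduction in cost per camera feed.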

Financial Services: Fraud Detection at Scale

A global financial institution implemented L4 instances to power their real-time fraud detection system, which analyzes transaction patterns to identify potentially fraudulent activities:

- Processing time for complex fraud detection models reduced from 300ms to 85ms

- System capacity increased by 250% without additional infrastructure investment

- False positive rate decreased by 37%, reducing customer friction while maintaining security

The dramatic performance improvements enabled the institution to implement more sophisticated fraud detection models that were previously considered too computationally intensive for real-time use. This enhanced capability has resulted in millions in fraud prevention while improving legitimate customer experiences.

Media and Entertainment: Transforming Content Creation

A media production company leveraged L4 instances to power their AI-assisted content creation platform, which helps creators generate and edit video content:

- Real-time video effects rendering that previously required offline processing

- 4x faster training for custom style transfer models

- 60% reduction in infrastructure costs compared to previous GPU solutions

The performance and efficiency of L4 instances enabled the company to democratize advanced video production capabilities, making sophisticated AI-powered tools accessible to creators who previously lacked access to high-end production resources.

Future-Proofing: L4 Instances and Emerging AI Trends

Support for Multimodal AI Applications

As AI evolves toward multimodal applications that combine text, images, audio, and other data types, L4 instances are well-positioned to support these complex workloads:

- Efficient handling of diverse data types: The balanced architecture accommodates the varied processing requirements of multimodal models

- Model and framework compatibility: Runs open multimodal models such as CLIP through standard frameworks like PyTorch and TensorFlow

- Scalability: The ability to scale horizontally for particularly demanding multimodal applications

Organizations exploring multimodal AI applications will find L4 instances provide an ideal foundation for these cutting-edge use cases, offering the performance and flexibility needed to push boundaries in this rapidly evolving field.

Edge-Cloud Hybrid Deployments

The efficiency of L4 GPUs makes them particularly valuable in edge-cloud hybrid architectures, where models may be deployed across both cloud infrastructure and edge devices:

- Consistent software stack: The same CUDA and TensorRT tooling runs on cloud L4 GPUs and on NVIDIA edge platforms such as Jetson, simplifying development and testing

- Model optimization: Tools such as TensorRT for quantizing and optimizing models developed on L4 instances before deploying them to edge devices

- Seamless workload distribution: Facilitating intelligent distribution of AI processing between cloud and edge based on latency, bandwidth, and privacy requirements

As organizations increasingly adopt hybrid deployment models that combine cloud and edge computing, L4 instances provide an ideal development and deployment platform that bridges these environments effectively.

Conclusion: The Strategic Advantage of Google Cloud's L4 Instances

Google Cloud's L4 GPU instances represent a significant advancement in cloud-based AI infrastructure, offering an exceptional combination of performance, efficiency, and cost-effectiveness. For organizations looking to deploy sophisticated AI capabilities in production environments, these instances provide a compelling platform that balances technical capabilities with business considerations.

The versatility of L4 instances makes them suitable for a wide range of AI workloads, from computer vision and natural language processing to recommendation systems and emerging multimodal applications. This flexibility, combined with Google Cloud's robust ecosystem of AI tools and services, creates a powerful foundation for innovation across industries.

As AI continues to transform business operations and customer experiences, having access to optimized infrastructure becomes increasingly critical to competitive success. Google Cloud's L4 instances provide exactly this type of optimized foundation, enabling organizations to push the boundaries of what's possible with AI while maintaining operational efficiency and cost control.

For developers, data scientists, and IT leaders looking to maximize the impact of their AI initiatives, Google Cloud's L4 instances represent not just a technical solution, but a strategic advantage in the rapidly evolving landscape of artificial intelligence.
